Introduction

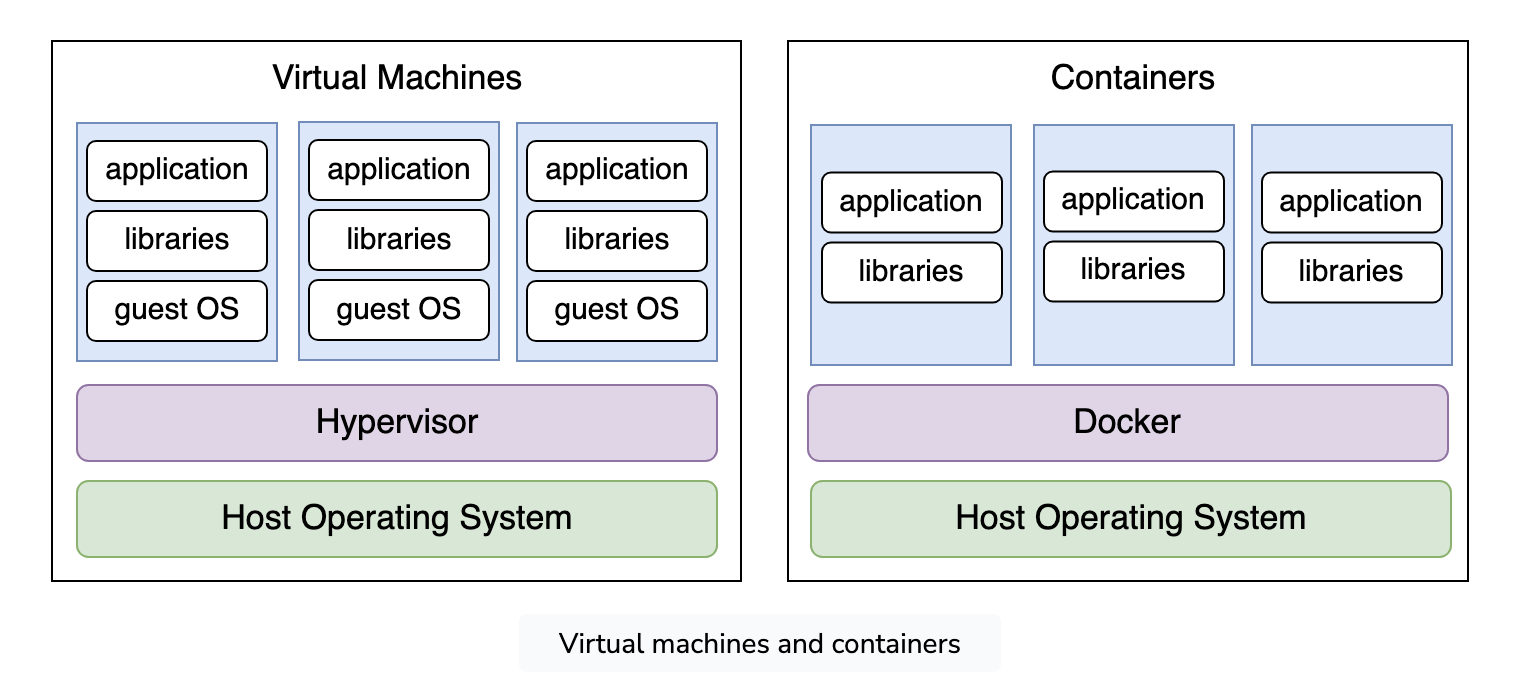

Whereas traditional hardware virtualization launches multiple guest systems on a common host system, Docker runs applications as isolated processes on the same system with the help of containers. This is called container-based virtualization, also referred to as operating-system-level virtualization.

Figure 1: Virtual Machine vs. Container


While each virtual machine launches its own operating system, Docker containers share the kernel of the host system.
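A quick way to observe this sharing is to ask the kernel for its release string. The short Python sketch below is only an illustration (the file name kernel_check.py in the note after it is hypothetical): on a Linux host, running it directly and then inside a container should print the same kernel release, because the container has no kernel of its own.

```python
import platform


def kernel_info() -> str:
    """Return the OS name and kernel release reported to the running process."""
    # On Linux, platform.release() reports the kernel release (like `uname -r`).
    # A containerized process has no kernel of its own, so it sees the host's kernel.
    return f"{platform.system()} {platform.release()}"


print(kernel_info())
```

For example, assuming Docker is installed and the script is saved in the current directory as kernel_check.py: `docker run --rm -v "$PWD":/src python:3.12-slim python /src/kernel_check.py`. With Docker Desktop on macOS or Windows, containers run inside a lightweight Linux VM, so the release printed inside the container matches that VM's kernel rather than the host OS.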

One big advantage of container-based virtualization is that applications with different requirements can run in isolation from one another without the overhead of a separate guest system. Additionally, containers let applications be deployed across platforms and in different infrastructures without adapting to the hardware or software configuration of each host, as long as the host provides a compatible kernel and CPU architecture.

Docker helps developers package their applications into containers.

Benefits

Docker makes it easier to move software between computers and servers, and it helps ensure that the software runs the same way every time, no matter where it runs. It can also reduce conflicts between software packages on the same computer, since each package runs in its own container.

Any application we run or deploy, whether a web application, a machine learning model, a database, or a third-party package, needs certain libraries and dependencies. When several applications on the same machine require different versions of the same dependency, they can run into a dependency conflict.

This problem can be solved either by running each application on a separate machine or by packaging each application together with all of its dependencies, which is what a container does.
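To make the conflict concrete, the Python sketch below (the application names and version pins are made up purely for illustration) collects the versions each application pins and reports any package pinned to more than one version. In a single shared environment such a package can be installed at only one version; giving each application its own container gives each its own environment, so the per-container check finds nothing.

```python
from typing import Dict, List, Tuple

# Hypothetical apps and version pins, for illustration only.
apps: Dict[str, Dict[str, str]] = {
    "legacy_api": {"numpy": "1.21.0", "flask": "1.1.4"},
    "ml_model": {"numpy": "1.26.4", "scikit-learn": "1.4.2"},
}


def find_conflicts(apps: Dict[str, Dict[str, str]]) -> List[Tuple[str, Dict[str, str]]]:
    """Return (package, {app: version}) for every package pinned to more than one version."""
    pins: Dict[str, Dict[str, str]] = {}
    for app, requirements in apps.items():
        for package, version in requirements.items():
            pins.setdefault(package, {})[app] = version
    return [(pkg, by_app) for pkg, by_app in sorted(pins.items())
            if len(set(by_app.values())) > 1]


# One shared environment: numpy cannot be installed at both versions at once.
print(find_conflicts(apps))  # [('numpy', {'legacy_api': '1.21.0', 'ml_model': '1.26.4'})]

# One container per app: each environment is checked on its own.
for name, requirements in apps.items():
    print(name, find_conflicts({name: requirements}))
```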

| Aspect | Docker | Virtual Machines |
|---|---|---|
| Design | Uses containers, sharing OS kernel, lightweight | Uses hypervisors, separate OS for each VM, more overhead |
| Efficiency | Efficient, shares host OS resources | Less efficient, requires resources for each VM |
| Performance | Generally faster and lighter | Can be slower with more overhead |
| Isolation | Process-level isolation with shared OS kernel | Full isolation with separate OS for each VM |
| Portability | Portable, encapsulates app and dependencies | Less portable, affected by hypervisor and OS differences |
| Startup Time | Quick startup due to shared OS kernel | Slower startup, involves booting complete OS |
| Use Cases | Deploying user authentication (e.g., Keycloak) | Running older software (e.g., Windows XP) for legacy applications. Strong isolation for sensitive applications like financial systems (e.g., Swift). |
| Overhead | Low overhead, shared host OS kernel | Higher overhead, runs complete OS instances |

A hypervisor is like a conductor for virtual machines, enabling a single computer to run multiple operating systems independently.

Docker key concepts

1) Images

In layman's terms, a Docker image can be compared to a template used to create Docker containers. An image is a read-only template that defines the application, its dependencies, and the runtime environment, so images are the building blocks of containers. You can use docker run to create and start a container from an image.

Docker images are stored in a Docker registry. This can be a user's local repository or a public registry such as Docker Hub, which allows multiple users to collaborate in building an application.

```shell
# Pull an image from Docker Hub
docker pull nginx

# List all images on the local machine
docker images
```

In simple terms, a Docker Image is like a template for creating Docker Containers. These read-only templates define an application, its dependencies, and the runtime environment. You use “docker run” to turn an image into a container. Docker Images are stored in a Docker Registry, which can be local or public like Docker Hub, enabling collaboration among users in building applications.
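Conceptually, an image is a stack of read-only filesystem layers, and each container adds its own thin writable layer on top. The Python sketch below models that idea with `collections.ChainMap`, which sends all writes to its first mapping, and `types.MappingProxyType` for read-only layers. The file paths and contents are hypothetical, and this is an analogy rather than how Docker actually stores images.

```python
from collections import ChainMap
from types import MappingProxyType

# Hypothetical image layers, modeled as read-only path -> content mappings.
base_layer = MappingProxyType({"/etc/os-release": "debian", "/usr/local/bin/python": "<binary>"})
app_layer = MappingProxyType({"/app/app.py": "print('hello')"})
image_layers = (app_layer, base_layer)  # topmost layer first, since ChainMap searches in order


def create_container(layers):
    """Stack a fresh, empty writable layer on top of the shared read-only image layers."""
    return ChainMap({}, *layers)


c1 = create_container(image_layers)
c2 = create_container(image_layers)

# ChainMap sends every write to its first mapping, i.e. the container's own layer.
c1["/app/app.py"] = "print('patched')"
c1["/tmp/cache"] = "data"

print(c1["/app/app.py"])         # print('patched')
print(c2["/app/app.py"])         # print('hello')
print(app_layer["/app/app.py"])  # print('hello')
print("/tmp/cache" in c2)        # False
```

Both containers read the same image layers, but c1's changes live only in its own writable layer, which is why removing a container discards its changes while the image stays untouched. Real storage drivers such as overlay2 implement this at the filesystem level and also handle file deletions (through whiteout files), which this model ignores.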

2) Containers

A Docker container is a running instance of a Docker image; it holds the entire package needed to run the application. Containers are the ready-to-run applications created from Docker images, which is the ultimate purpose of Docker.

Containers are like small, standalone, and portable environments that include everything needed to run an application, such as the application code, dependencies, and runtime. Think of it like a “package” for an application.

- Application Code: This is the actual program or software that performs a specific task. It includes all the code the developer wrote to create the application.
- Dependencies: These are other software components that the application needs to function properly, such as libraries, frameworks, and other software packages the application relies on (e.g. the JDK).
- Runtime: This is the environment in which the application runs, such as the user-space files of the base operating system, system libraries, and any other services or components required to run the application (e.g. the JRE). The kernel and hardware are not packaged; they are shared with the host.

```shell
# Create a new container from an image
docker run -it --name mycontainer nginx

# List all running containers
docker ps

# Start, Stop and remove a container
docker start mycontainer
docker stop mycontainer
docker rm mycontainer
```

A Docker container is a live execution of a Docker Image, encapsulating everything required to run an application. It serves as a self-contained and portable environment, holding the application code, dependencies, and runtime. Essentially, containers act as ready-to-use applications created from Docker Images, offering a convenient and efficient way to deploy and run software.
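The start, stop, and rm commands above move a container through a small set of states. The Python sketch below is a simplified, hypothetical model of that lifecycle: state names follow those reported by `docker inspect` (created, running, exited), plus a made-up "removed" marker for a container that no longer exists. It is not Docker's implementation.

```python
# Hypothetical, simplified lifecycle model covering only the commands shown above.
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "stop"): "exited",
    ("exited", "start"): "running",
    ("created", "rm"): "removed",
    ("exited", "rm"): "removed",
}


class Container:
    """Tracks the state of one container as commands are applied."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.state = "created"  # state after `docker create`

    def apply(self, command: str) -> str:
        key = (self.state, command)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot '{command}' container {self.name!r} while {self.state}")
        self.state = TRANSITIONS[key]
        return self.state


def docker_run(name: str) -> Container:
    """Model `docker run` as `docker create` followed by `docker start`."""
    container = Container(name)
    container.apply("start")
    return container


c = docker_run("mycontainer")
print(c.state)           # running
print(c.apply("stop"))   # exited
print(c.apply("start"))  # running
try:
    c.apply("rm")        # rejected: the container is still running
except ValueError as err:
    print(err)
c.apply("stop")
print(c.apply("rm"))     # removed
```

The sketch rejects every command not listed in TRANSITIONS. Real Docker is more lenient in some of those cases, but it does refuse to remove a running container unless you stop it first or pass -f to docker rm.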

3) Dockerfile

A Dockerfile is a text file that contains instructions for building a Docker image. It specifies the base image, any additional software dependencies, and how to configure the container.

Docker can therefore build images automatically by reading the instructions from a Dockerfile. Running docker build executes those command-line instructions one after another to produce the image.

A Dockerfile for a Python application:

```dockerfile
# Use an official Python runtime as a parent image
FROM python:3.8-slim-buster

# Set the working directory to /app
WORKDIR /app

# Copy the current directory contents into the container at /app
COPY . /app

# Install the required packages
RUN pip install --no-cache-dir -r requirements.txt

# Document that the application listens on port 80
# (EXPOSE alone does not publish the port; use `docker run -p 80:80` for that)
EXPOSE 80

# Define the command to run the application
CMD ["python", "app.py"]
```

A Dockerfile for a Node.js application:

```dockerfile
# Specify the base image
FROM node:14-alpine

# Set the working directory
WORKDIR /app

# Copy the package.json and package-lock.json files to the container
COPY package*.json ./

# Install the dependencies
RUN npm install

# Copy the rest of the application files to the container
COPY . .

# Expose port 3000 for the application
EXPOSE 3000

# Start the application
CMD [ "npm", "start" ]
```

Building the image and adding a Docker Hub tag:

```shell
# Build a Docker image from the current directory and tag it as "myimage"
docker build -t myimage .

# Add a new tag to the existing "myimage" Docker image with the name "dockerhub4manohar/myimage"
docker tag myimage dockerhub4manohar/myimage
```

A Dockerfile is a text file with instructions for constructing a Docker image, specifying the base image, additional dependencies, and container configuration. Docker can automate image creation by reading these instructions, using “docker build” to execute a series of command-line instructions in sequence.
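The Node.js Dockerfile in this section copies package*.json and runs npm install before copying the rest of the source. The reason is the build cache: Docker reuses the result of a step when that step and everything before it are unchanged (for COPY, this includes the contents of the copied files), and once one step misses the cache, every later step is rebuilt. The Python sketch below is a simplified, hypothetical model of that rule (it chains one hash per step and is not BuildKit's actual algorithm), with strings standing in for file contents.

```python
import hashlib
from typing import Dict, List, Tuple

Step = Tuple[str, str]  # (instruction, arguments), e.g. ("RUN", "npm install")


def cache_keys(steps: List[Step], copied: Dict[str, str]) -> List[str]:
    """Compute one cache key per step, chained to the key of the step before it.

    `copied` maps the arguments of each COPY step to a stand-in for the content
    of the files it copies, because COPY depends on file contents, not just text.
    """
    keys: List[str] = []
    parent = ""
    for instruction, args in steps:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(f"{instruction} {args}".encode())
        if instruction == "COPY":
            h.update(copied[args].encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(steps: List[Step], before: Dict[str, str], after: Dict[str, str]) -> List[str]:
    """List the steps whose cache key changed, i.e. the steps that would run again."""
    old, new = cache_keys(steps, before), cache_keys(steps, after)
    return [f"{instr} {args}" for (instr, args), k_old, k_new in zip(steps, old, new)
            if k_old != k_new]


# The Node.js Dockerfile: dependencies are installed before the source is copied.
deps_first: List[Step] = [
    ("FROM", "node:14-alpine"), ("WORKDIR", "/app"),
    ("COPY", "package*.json ./"), ("RUN", "npm install"),
    ("COPY", ". ."), ("EXPOSE", "3000"), ("CMD", '["npm", "start"]'),
]
# A hypothetical variant that copies everything before installing.
source_first: List[Step] = [
    ("FROM", "node:14-alpine"), ("WORKDIR", "/app"),
    ("COPY", ". ."), ("RUN", "npm install"),
    ("EXPOSE", "3000"), ("CMD", '["npm", "start"]'),
]

# Only the application source changes between builds; package*.json does not.
before = {"package*.json ./": "deps-v1", ". .": "deps-v1 + src-v1"}
after = {"package*.json ./": "deps-v1", ". .": "deps-v1 + src-v2"}

print(rebuilt_steps(deps_first, before, after))
print(rebuilt_steps(source_first, before, after))
```

With the dependencies-first order, editing only the source code leaves RUN npm install cached; with the source-first variant, the same edit forces npm install to run again.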

4) Registry (Docker Hub)

A Docker registry is a repository for storing and distributing Docker images. Docker Hub is a popular public registry, but you can also set up your own private registry.

```shell
# Log in to Docker Hub (with no server address, docker login uses Docker Hub)
docker login -u dockerhub4manohar

# Push an image to the registry
docker tag myimage dockerhub4manohar/myimage
docker push dockerhub4manohar/myimage

# Pull an image from the registry
docker pull dockerhub4manohar/myimage
```

A Docker registry is a storage and distribution hub for Docker images. While Docker Hub is a widely used public registry, users can also set up their own private registry for storing and sharing images.
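Short names like dockerhub4manohar/myimage work because Docker fills in defaults when a reference omits them: the registry defaults to Docker Hub (docker.io), single-name images such as nginx live in Docker Hub's library namespace, and the tag defaults to latest. The Python sketch below applies a simplified version of those rules; parse_image_ref and ImageRef are hypothetical names, and the sketch skips the validation Docker itself performs.

```python
from typing import NamedTuple, Optional

DEFAULT_REGISTRY = "docker.io"


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, tag and digest (simplified)."""
    name, _, digest = ref.partition("@")
    first, slash, rest = name.partition("/")
    # The first component is a registry host only if it looks like one
    # (contains '.' or ':', or is 'localhost'); otherwise Docker Hub is assumed.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = DEFAULT_REGISTRY, name
    # A tag follows the last ':' of the repository part.
    if ":" in remainder:
        repository, tag = remainder.rsplit(":", 1)
    else:
        repository, tag = remainder, None
    # Single-name images on Docker Hub belong to the 'library' namespace.
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = f"library/{repository}"
    # With neither a tag nor a digest, the 'latest' tag is assumed.
    if tag is None and not digest:
        tag = "latest"
    return ImageRef(registry, repository, tag, digest or None)


for ref in ["nginx", "dockerhub4manohar/myimage", "python:3.8-slim-buster", "localhost:5000/myimage:1.0"]:
    print(ref, "->", parse_image_ref(ref))
```

This is also why docker tag myimage dockerhub4manohar/myimage comes before docker push: the bare name myimage would resolve to docker.io/library/myimage, a namespace reserved for official images, whereas the new tag points at the dockerhub4manohar namespace on Docker Hub.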

5) Compose

Docker Compose is a tool for managing multi-container Docker applications. It enables users to specify services, networks, and volumes for an application in a single YAML file, simplifying the configuration and deployment process.

```yaml
# Define a multi-container application using Docker Compose
version: '3'
services:
  web:
    build: .
    ports:
      - "5000:5000"
    depends_on:
      - db
  db:
    image: postgres
    environment:
      POSTGRES_PASSWORD: example
```
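Compose uses depends_on to decide start order: in the file above, db is started before web. The Python sketch below reproduces that ordering with a topological sort from the standard library's graphlib (Python 3.9+), using the services section of the file written as a plain dict; start_order is a hypothetical helper, not part of Compose.

```python
from graphlib import TopologicalSorter
from typing import Dict, List

# The services section of the compose file above, as a plain Python dict.
services: Dict[str, dict] = {
    "web": {"build": ".", "ports": ["5000:5000"], "depends_on": ["db"]},
    "db": {"image": "postgres", "environment": {"POSTGRES_PASSWORD": "example"}},
}


def start_order(services: Dict[str, dict]) -> List[str]:
    """Order services so that each one comes after every service it depends_on."""
    graph = {name: set(spec.get("depends_on", [])) for name, spec in services.items()}
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(graph).static_order())


print(start_order(services))  # ['db', 'web']
```

Note that depends_on only orders container startup; it does not wait for PostgreSQL to accept connections. Compose's long-form depends_on with condition: service_healthy, combined with a healthcheck on db, covers that case.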

Summary

- Traditional hardware virtualization runs multiple guest systems on a single host, but Docker uses container-based virtualization, enabling applications to run as isolated processes on the same system. Unlike virtual machines, Docker containers share the host system’s kernel, allowing diverse applications to run independently across platforms.
- Developers can easily package applications into images and store them in Docker registries like Docker Hub.
- Docker containers, live executions of Docker images, offer portable, self-contained environments for efficient software deployment.
- Dockerfiles provide instructions for building Docker images, automating the creation process.
- Docker Compose simplifies managing multi-container applications by specifying services, networks, and volumes in a single YAML file.